Morality, thy discount is hyperbolic

One well-known failure mode of Utilitarian ethics is the "utility monster": for any values of "benefit" and "suffering", it's possible to postulate an entity (Bob) and a course of action (The Plan) where Bob receives so much benefit that everyone else can suffer arbitrarily great pain and yet you "should" still do The Plan.

That this can happen is often used as a reason to not be a Utilitarian. Never mind that there are no known real examples — when something is paraded as a logical universal ethical truth, it's not allowed to even have theoretical problems, for much the same reason that God doesn't need to actually "microwave a burrito so hot that even he can't eat it" for the mere possibility to be a proof that no god is capable of being all-powerful.
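The arithmetic behind the utility monster is just an unweighted sum. Here is a minimal Python sketch, assuming the simplest possible utilitarian rule (add up everyone's change in utility and go ahead if the total is positive). The function names and numbers are hypothetical, chosen only for illustration.

```python
def plan_net_utility(bob_benefit: int, others: int, suffering_each: int) -> int:
    """Summed change in utility if The Plan goes ahead: Bob gains, everyone else loses."""
    return bob_benefit - others * suffering_each


def summing_rule_approves(bob_benefit: int, others: int, suffering_each: int) -> bool:
    """Naive utilitarian rule (an assumption): do The Plan iff the total is positive."""
    return plan_net_utility(bob_benefit, others, suffering_each) > 0


def monster_threshold(others: int, suffering_each: int) -> int:
    """Bob's benefit must exceed this for the rule to approve, however bad the suffering."""
    return others * suffering_each


if __name__ == "__main__":
    others = 7_000_000_000  # everyone except Bob (hypothetical figure)
    for suffering in (1, 1_000, 10**12):  # make the suffering as bad as you like...
        bob = monster_threshold(others, suffering) + 1  # ...Bob needs only one unit more
        print(suffering, summing_rule_approves(bob, others, suffering))
```

Nothing in the summing rule caps `bob_benefit`, which is exactly the gap the utility monster exploits.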

I have previously suggested one way to limit this, normalisation, but it was obviously not good enough once a group of entities was involved. What I was looking for then was a way to combine multiple entities in a sensible fashion, and now I've found that one already existed: hyperbolic discounting.

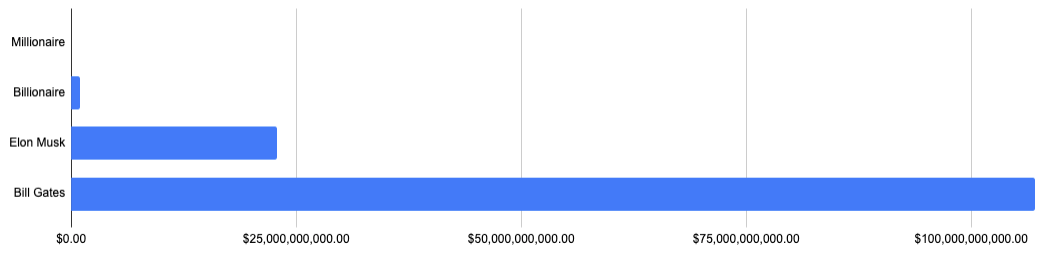

Hyperbolic discounting is how we all naturally think about the future: the further into the future a reward is, the less important we regard it. For example, if I ask "would you rather have $15 immediately, $30 after three months, $60 after one year, or $100 after three years?", most people find those options equally desirable, even though nobody expects the US dollar to lose 85% of its value in just three years.

Most people do a similar thing with large numbers, although the curve is logarithmic rather than hyperbolic. There's a cliché where presenters describe how big some number X is by saying that X seconds is a certain number of years, acting as though it's surprising that "while a billion seconds is 30 years, a trillion is 30 thousand years". (Personally, I am so used to this that it feels weird that they even need to say it.)
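To see why the $15/$30/$60/$100 pattern is called hyperbolic rather than exponential, one can back out the discount each option implies relative to $15 now. Under exponential discounting (a constant rate), every option would imply the same rate; under the simple hyperbolic form V = A / (1 + kD), every option would imply the same k. This is a minimal sketch that assumes the four options really are valued equally; the function names are hypothetical.

```python
import math

# The four options from the text, which most people reportedly find equally
# desirable: $15 now versus (delay in years, amount in dollars) later.
IMMEDIATE = 15.0
OPTIONS = [(0.25, 30.0), (1.0, 60.0), (3.0, 100.0)]


def implied_exponential_rate(amount: float, delay_years: float,
                             now: float = IMMEDIATE) -> float:
    """Continuously compounded annual rate r with amount * exp(-r * delay) == now."""
    return math.log(amount / now) / delay_years


def implied_hyperbolic_k(amount: float, delay_years: float,
                         now: float = IMMEDIATE) -> float:
    """Parameter k with amount / (1 + k * delay) == now (simple hyperbolic form)."""
    return (amount / now - 1.0) / delay_years


for delay, amount in OPTIONS:
    r = implied_exponential_rate(amount, delay)
    k = implied_hyperbolic_k(amount, delay)
    print(f"${amount:>5.0f} after {delay:>4} years: "
          f"exponential rate {r:.0%} per year, hyperbolic k = {k:.2f} per year")
```

The implied exponential rate falls from about 277% per year at three months to about 63% per year at three years, a more-than-fourfold drop, while the implied hyperbolic k only falls from 4 to about 1.9. Neither one-parameter curve fits perfectly, but a rate that drops steeply with delay is exactly what a constant-rate exponential model cannot produce.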

So, what's the point of this? Well, one of the ways the utility monster makes a certain category of nerd look like an arse is the long-term future of humanity. I mean the sort of person who (mea culpa) worries about X-risks (eXtinction risks) and says "don't worry about climate change, worry about non-aligned AI!" to an audience filled with people who very much worry about climate-change-induced extinction, and who think that AI can't possibly be a real issue when Alexa can't even figure out which room's lightbulb it's supposed to switch on or off.

To put it in concrete terms: if your idea of "long-term planning" means you are interested in star-lifting as a way to extend the lifetime of the Sun from a few billion years to a few tens of trillions, if you intend to help expand to fill the local supercluster with like-minded people, and if your idea of "person" includes a mind running on a computer that is as power-efficient as a human brain and effectively immortal, then you're talking about giving 5*10^42 people a multi-trillion-year lifespan.

If you're talking about giving 5*10^42 people a multi-trillion-year lifespan, you will look like a massive arsehole if you say, for example, that "climate change killing 7 billion people in the next century is a small price to pay for making sure we have an aligned AI to help us with the next bit". Pointing out that a non-aligned AI is likely to irreversibly destroy everything it's not explicitly trying to preserve isn't going to change anyone's mind. Even if you get past the inferential distance that makes most people respond to this with "off switch!" (both as a solution to the AI and to whichever channel you're broadcasting on), at scales like this, utilitarianism feels wrong.

So, where does hyperbolic discounting come in?

We naturally discount the future as a "might not happen". This is good. No matter how certain we think we are on paper, there is always the risk of an unknown-unknown. This risk doesn't go away with more evidence, either. The classic illustration of the problem of induction is a turkey who observes that every morning it is fed by the farmer, and so decides that the farmer has its best interests at heart; the longer this goes on, the more certain it becomes, yet each day brings it closer to being slaughtered for Thanksgiving. The currently known equivalents in physics would be things like false vacuums, brane collisions triggering a new big bang, or Boltzmann brains, but they only count as unknown-unknowns from the point of view of people who have never heard of them before.
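The "might not happen" intuition can be made concrete. If we faced a known, constant yearly chance λ of some catastrophe, the chance of surviving to year t would fall off exponentially, as exp(-λt). But we don't know λ, which is the whole point about unknown-unknowns. A standard result is that averaging exp(-λt) over an uncertain λ gives a curve with a much longer tail, and if λ is assumed to be exponentially distributed with mean k, the average comes out exactly as 1/(1 + kt): hyperbolic. The Python sketch below checks this numerically; the exponential prior on λ and the 1%-per-year mean are illustrative assumptions only.

```python
import math
import random


def survival_probability(hazard_rate: float, t: float) -> float:
    """Chance of surviving to time t if the hazard rate were known and constant."""
    return math.exp(-hazard_rate * t)


def expected_survival(t: float, mean_hazard: float,
                      samples: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo average of exp(-lambda * t) when the hazard rate lambda is itself
    unknown. ASSUMPTION: lambda is exponentially distributed with the given mean."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        hazard = rng.expovariate(1.0 / mean_hazard)  # expovariate takes 1/mean
        total += survival_probability(hazard, t)
    return total / samples


def hyperbolic_discount(t: float, k: float) -> float:
    """Closed form of the same average: 1 / (1 + k t), where k is the mean hazard."""
    return 1.0 / (1.0 + k * t)


if __name__ == "__main__":
    MEAN_HAZARD = 0.01  # hypothetical: a 1%-per-year unknown-unknown, on average
    for t in (1, 10, 100, 1_000, 10_000):
        simulated = expected_survival(t, MEAN_HAZARD)
        print(f"t = {t:>6} years: simulated {simulated:.4f}, "
              f"1/(1+kt) = {hyperbolic_discount(t, MEAN_HAZARD):.4f}")
```

Other assumptions about how λ is distributed give other curves; the exponential prior is simply the one whose average is exactly 1/(1 + kt).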

Because we don't know the future, we should discount it. There are not 5*10^42 people. There might never be 5*10^42 people. To the extent that there might be, they might all turn out to be Literally Worse Than Hitler. Sure, there's a chance of human flourishing on a scale that makes Heaven as described in the Bible seem worthless in comparison (and before you're tempted to point out that Biblical Heaven is supposed to be eternal, which is infinitely longer than any number of trillions of years: that counter-argument presumes Utilitarian logic and therefore doesn't apply). But the further away those people and that outcome are from you, the less weight you should put on those people really coming to exist and that outcome really happening.

Instead: Concentrate on the here-and-now. Concentrate on ending poverty. Fight climate change. Save endangered species. Campaign for nuclear disarmament and peaceful dispute resolution. Not because there can't be 5*10^42 people in our future light cone, but because we can falter and fail at any and every step on the way from here.

Original post: https://kitsunesoftware.wordpress.com/2020/01/08/morality-thy-discount-is-hyperbolic/

Original post timestamp: Wed, 08 Jan 2020 21:46:38 +0000

Tags: black swan, discounting, future of humanity, long-term future, outside context problem, Technological Singularity, Utilitarianism, utility monster, x-risk

Categories: Philosophy