Arguments, hill climbing, the wisdom of the crowds

Have you ever had an argument that seems to go nowhere, where both sides act as if their position is self-evident and obvious, and each is convinced the other person "is clearly being deliberately obtuse"? I hope that's common, and not just one of my personal oddities. Ahem.

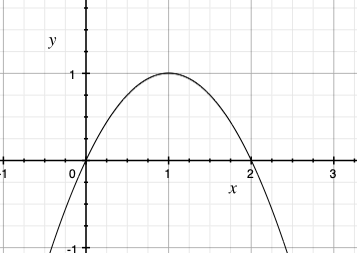

In the current world of machine learning (yes these two things are connected), one of the well-known methods is a thing called "hill climbing". You have some relationship between two things, and you want to learn the relationship between them — the function — so that you can maximise the thing you want to have more of (fun), or minimise the thing you want less of (pain). This function might have any shape and might represent any relationship. If you were to plot the whole thing on graph paper, it would be easy to see where the best place was:

But in the real world, data collection is expensive: you can't just plot the whole graph, look at it, and call it a day. When you can't look at all possible solutions, then instead of merely guessing where the best might be, you might want to follow a standard method, and this is where "hill climbing" comes in. With hill climbing, you start at some point and measure it, then measure the points around it, and take a "step" in whichever direction is "best" (for the thing you care about). You repeat this until no neighbouring point is better than where you are standing. Say you start at x = 0 on the graph above: you look at x = 0.1 and x = -0.1, see that x = 0.1 is better, and use that as your next measurement. If you imagine the graph is a hill, you are climbing to the top of that hill (sometimes the description is reversed and you're trying to get to the bottom of the valley, but that doesn't change anything important).
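To make the procedure concrete, here is a minimal Python sketch of one-dimensional hill climbing with a fixed step of 0.1, as in the x = 0 example. The landscape `single_hill` (a single peak at x = 2) and the function names are hypothetical stand-ins for the graph, not anything from a particular library.

```python
def hill_climb(f, x, step=0.1, max_steps=10_000):
    """Greedy one-dimensional hill climbing (illustrative sketch).

    From the current x, measure x - step and x + step, move to whichever
    neighbour scores higher, and stop as soon as neither neighbour beats
    the current point, i.e. we are standing on a (possibly local) peak.
    """
    for _ in range(max_steps):
        best = max((x - step, x + step), key=f)
        if f(best) <= f(x):
            return x  # no neighbour is better: this is a peak
        x = best
    return x  # gave up after max_steps without finding a peak


def single_hill(x):
    # Hypothetical stand-in for the graph: one smooth hill peaking at x = 2.
    return -(x - 2) ** 2


peak = hill_climb(single_hill, x=0.0)
print(round(peak, 6))  # prints 2.0
```

Using `<=` rather than `<` in the stopping test means the climber also halts on flat ground, instead of shuffling sideways until `max_steps` runs out.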

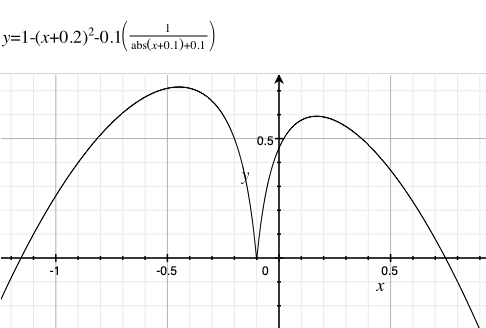

There's a problem with this, though. Hills don't usually look so simple, so let's make the graph more complicated:

In this case, starting hill climbing at x = 0 will always give you the smaller of the two peaks, because every uphill step from there leads towards it. And of course, in the real world things are even more complicated than this, with countless peaks and valleys: if your goal is Everest, you will definitely fail to reach that peak using this algorithm if you start anywhere in the Americas, Australia, Antarctica, or on any island, because getting there would mean first going downhill to sea level.
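The trap is easy to reproduce. This sketch assumes a made-up two-peak landscape, `two_peaks`, with a smaller peak near x = 1 and a taller one near x = -3, and redefines the same greedy climber so the block runs on its own. Starting at x = 0 it settles on the smaller peak; starting at x = -2 it finds the taller one, so which peak you reach is decided entirely by where you begin.

```python
import math


def hill_climb(f, x, step=0.1, max_steps=10_000):
    # Greedy climber: step to the better neighbour until neither improves.
    for _ in range(max_steps):
        best = max((x - step, x + step), key=f)
        if f(best) <= f(x):
            return x
        x = best
    return x


def two_peaks(x):
    # Hypothetical landscape: a smaller peak near x = 1 (height ~1) and
    # a taller peak near x = -3 (height ~2), separated by a valley.
    return math.exp(-(x - 1) ** 2) + 2 * math.exp(-(x + 3) ** 2)


for start in (0.0, -2.0):
    top = hill_climb(two_peaks, start)
    print(f"start {start:+.1f} -> peak at x = {top:.2f}, height {two_peaks(top):.3f}")
```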

There are a lot of different ways to upgrade hill climbing to avoid this problem. One in particular is called either "random-restart hill climbing" or "shotgun hill climbing". It's a simple and surprisingly effective method: do normal hill climbing many times from random starting values, then pick the best result.
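Random-restart hill climbing adds only a few lines on top of the basic climber. Here is a minimal sketch, again assuming the hypothetical `two_peaks` landscape; the search range of -6 to 6, the 50 restarts, and the fixed seed are arbitrary choices made for illustration.

```python
import math
import random


def two_peaks(x):
    # Hypothetical landscape: a smaller peak near x = 1 (height ~1) and
    # a taller peak near x = -3 (height ~2).
    return math.exp(-(x - 1) ** 2) + 2 * math.exp(-(x + 3) ** 2)


def hill_climb(f, x, step=0.1, max_steps=10_000):
    # Plain greedy hill climbing: move to the better neighbour until
    # neither neighbour improves on the current point.
    for _ in range(max_steps):
        best = max((x - step, x + step), key=f)
        if f(best) <= f(x):
            return x
        x = best
    return x


def random_restart_hill_climb(f, low, high, restarts=50, seed=0):
    # Run ordinary hill climbing from many random starting points in
    # [low, high], then keep whichever finishing point scores best.
    rng = random.Random(seed)
    finishes = [hill_climb(f, rng.uniform(low, high)) for _ in range(restarts)]
    return max(finishes, key=f)


best = random_restart_hill_climb(two_peaks, low=-6.0, high=6.0)
print(f"best x = {best:.2f}, height = {two_peaks(best):.3f}")
```

Each restart runs independently of the others, and the final `max(..., key=f)` is the "pick the best" step: it only compares where each climb finished, not how it got there.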

In a flash of insight this morning while pouring milk into my cereal, I realised that this could explain how those annoying arguments happen (because we're on different metaphorical hills in our models of reality or of language), and could also help explain why the wisdom of the crowds can be so effective (because everyone's on a random hill, and the crowd can pick the best).

This doesn't make the usual problems with the wisdom of the crowd go away. When there is an actual right answer, experts do better, and everyone else just dilutes that answer rather than reinforcing it; and if the crowd is allowed to deliberate rather than everyone voting independently, the crowd follows a charismatic leader and you get groupthink. But the idea does come with testable predictions: things which help you randomise-and-restart can help you make better models of reality, so long as you can ignore the old mental model right up until it is time to compare the new and old models:

- Every language you learn should make it easier to learn more; and 5 hours of nothing-but-$new_language should be better for learning the structure of the language than 10 minutes of $new_language every day for a month (roughly the same total time), because 10-minute blocks are short enough that you keep thinking in $old_language about $new_language.

- Any psychotropic substance which lets you restart from scratch but without deleting old memories (temporarily suppressing them is fine) would be a nootropic.

- If it's possible to delete a cognitive anchor, doing so would lead to modelling reality better.

My German is nowhere near as good as I want it to be, so I'm going to try #1 for a bit. The plan is 5 hours once per month, in addition to all my various 10-minutes-per-day vocabulary memorisation apps (memorising doesn't require understanding, and I need both).

Original post timestamp: Mon, 27 Dec 2021 11:48:06 +0000

Tags: AI, arguments, hill climbing, machine learning, theory of mind, wisdom of the crowds

Categories: Minds, Philosophy, Psychology